How to Prioritize Features for a Lean Custom SaaS MVP

Short Summary

This post explains how to prioritize features for a lean custom SaaS MVP so teams can validate ideas faster without blowing up timelines or budgets. It shows how to focus on a single business hypothesis, avoid common MVP traps like feature creep and over-engineering, and use simple prioritization frameworks to decide what truly matters for the first release. With step-by-step guidance, real examples from healthcare, mortgage, and education, and practical checklists, the post emphasizes building only the features needed to test value, gathering early user feedback, and scaling thoughtfully after validation.

Building a custom SaaS platform is exciting and terrifying at the same time. You have a vision, a market, and probably a dozen features you think are critical. But if your goal is lean validation and fast time to market, you can’t build everything at once. You need to choose the right things to build first.

I’ve worked with founders and CTOs in healthcare, mortgage, and education who thought every feature was a must-have. Then we forced discipline. The result was faster launches, clearer user feedback, and lower cost. In many cases teams cut MVP build time by 40 to 50 percent. That’s real time and money saved.

This post walks you through practical ways to prioritize features for a custom SaaS MVP. No fluff. No magic formulas. Just steps you can use today to decide what to build, how to validate it, and how to avoid common traps that blow up timelines and budgets.

Why prioritizing features matters

When you’re building for regulated verticals like healthcare or mortgage, the pressure to over-engineer is real. Compliance, integrations, and data security feel like reasons to add more features early. I get it. But piling on complexity before you validate the core value is how projects stall and budgets swell.

Prioritization gives you a few things at once:

- Faster time to market

- Earlier user feedback on what actually matters

- Lower initial build and maintenance costs

- A clearer roadmap for sensible, staged investment

Think of an MVP as the smallest thing that proves your product hypothesis. If you’re not clear on which hypothesis you want to test first, you'll spend time building features that produce no answers.

What is a minimum viable product? A quick refresher

In plain terms, a minimum viable product is the smallest set of features that lets you test a business assumption. That assumption might be: will clinics schedule more visits if we automate reminders, or will loan officers close more deals with an AI-assisted underwriting tool?

People often confuse an MVP with a low-quality product. They are not the same. An MVP must be usable, reliable, and secure enough for real users. In regulated industries especially, you need to think about compliance and data privacy up front, but that does not mean building every feature from day one.

Ask yourself two questions to scope an MVP:

- What is the single business outcome I need to validate first?

- Which smallest set of features will produce measurable evidence for that outcome?

Answering those two will stop feature creep before it starts.

Common mistakes I see founders make

Here are the traps that kill MVP speed and increase cost. I’ve seen most of these in real projects.

- Trying to build everything at once. The list is endless, but your time and money are not.

- Confusing “nice to have” with “must have.” If a feature doesn’t change a user decision, it’s often optional for the MVP.

- Over-designing UX for the first release. Good UX matters, but you don’t need polished micro-interactions to test demand.

- Underestimating integrations. Every external API can add unknown time. If an integration isn’t essential to the hypothesis, fake it or remove it.

- Neglecting compliance early. For healthcare or finance, skipping privacy and security planning is a false economy.

One quick example: a client in healthcare wanted full EHR integration for launch. We asked what would prove the product’s value. It turned out automated appointment reminders and a provider dashboard were enough to validate demand. We launched without EHR integration, tested the hypothesis, and then prioritized integration once the metric moved. Had we tried to integrate the EHR first, the project would have doubled in time and cost.

Simple frameworks that actually help

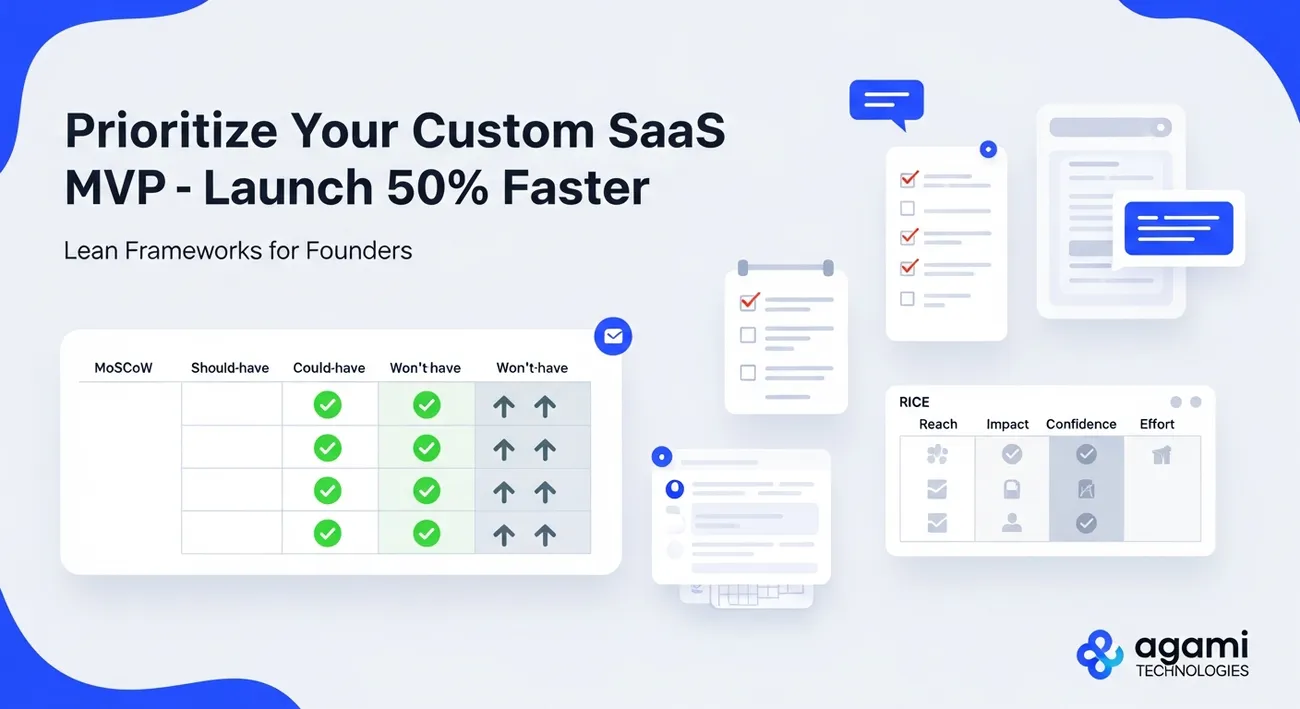

Frameworks give you a language to compare features, not a recipe that guarantees success. I use lightweight versions of familiar methods because heavyweight scoring systems rarely survive reality checks.

Here are three easy approaches you can apply right away:

1) Start with the outcome

List features by the outcome they influence. Outcomes are simple things like signups per week, time to decision, or retention after 30 days. Rank each feature by expected impact on that outcome and confidence in your assumption.

Example: If your goal is faster loan approvals, a feature that automates document collection probably moves the needle more than a pretty applicant dashboard.
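Here is a minimal Python sketch of outcome mapping, under stated assumptions: the `Feature` fields, the outcome names, and the 1 to 5 scores are illustrative, not a prescribed schema. It filters the backlog to features that influence one target outcome, then ranks them by expected impact, using confidence as the tie-breaker.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    outcome: str     # the outcome this feature is expected to move (assumed naming)
    impact: int      # expected impact on that outcome, 1 (low) to 5 (high)
    confidence: int  # confidence in the assumption, 1 (guess) to 5 (evidence)


def rank_for_outcome(features, target_outcome):
    """Keep only features tied to the target outcome, strongest first."""
    relevant = [f for f in features if f.outcome == target_outcome]
    # Sort by impact first; confidence breaks ties between equal impacts.
    return sorted(relevant, key=lambda f: (f.impact, f.confidence), reverse=True)


# Made-up backlog for the loan-approval example above.
backlog = [
    Feature("Applicant dashboard", "time_to_approval", impact=2, confidence=3),
    Feature("Automated document collection", "time_to_approval", impact=5, confidence=4),
    Feature("Referral program", "signups_per_week", impact=3, confidence=2),
]

for feature in rank_for_outcome(backlog, "time_to_approval"):
    print(feature.name)
```

The referral program drops out entirely because it moves a different outcome, which is the point: a feature is only compared against others that serve the same goal.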

2) Use a reduced RICE score

I simplify RICE to three parts: Reach, Impact, Confidence, dropping the usual Effort divisor. Multiply them, but keep the scale small, like 1 to 5 for each, so scores run from 1 to 125. That produces a quick prioritization number without false precision.

Reach: how many users does this affect? Impact: how big is the effect? Confidence: how sure are we about the impact? Features with low confidence but high impact are good candidates for experiment mode, not full build.
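One way to compute the reduced RICE score is sketched below. The 1 to 5 bounds follow the scale described above; the rule that routes a feature to an experiment (impact of 4 or more with confidence of 2 or less) is an assumed threshold you would tune for your team, and the feature names and scores are invented for illustration.

```python
def reduced_rice(reach: int, impact: int, confidence: int) -> int:
    """Reach x Impact x Confidence, each scored 1 to 5 (no Effort term)."""
    for value in (reach, impact, confidence):
        if not 1 <= value <= 5:
            raise ValueError("each factor must be between 1 and 5")
    return reach * impact * confidence


def triage(features):
    """Return (name, score, mode) tuples, highest score first.

    High impact with low confidence is flagged for an experiment rather
    than a full build. The thresholds are assumptions, not a standard.
    """
    rows = []
    for name, reach, impact, confidence in features:
        score = reduced_rice(reach, impact, confidence)
        mode = "experiment" if impact >= 4 and confidence <= 2 else "build"
        rows.append((name, score, mode))
    return sorted(rows, key=lambda row: row[1], reverse=True)


# (name, reach, impact, confidence): illustrative scores only.
candidates = [
    ("SMS reminders", 5, 4, 4),
    ("AI reminder wording", 3, 4, 1),
    ("Custom themes", 2, 1, 3),
]
for row in triage(candidates):
    print(row)
```

"AI reminder wording" scores low overall but is flagged as an experiment because its impact is high and its confidence is low, which matches the advice to test such features before building them.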

3) The must/should/could/won’t split

MoSCoW in a trimmed form. Ask: which features are must-haves to validate the hypothesis? Which are should-haves for MVP polish? Which could wait? Which will we explicitly not build now?

This creates an easy scope boundary and helps stakeholders accept trade-offs.
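A small Python sketch of the trimmed MoSCoW split, assuming each feature has already been labeled by the team. The feature names are hypothetical. The function returns every bucket, including empty ones, so the "won't" list is always visible to stakeholders rather than silently missing.

```python
from collections import defaultdict

BUCKETS = ("must", "should", "could", "won't")


def moscow_split(labels):
    """Group feature names by MoSCoW bucket; unknown labels are rejected."""
    split = defaultdict(list)
    for feature, bucket in labels.items():
        if bucket not in BUCKETS:
            raise ValueError(f"{feature!r} has unknown bucket {bucket!r}")
        split[bucket].append(feature)
    # Include empty buckets so the scope boundary is explicit.
    return {bucket: split[bucket] for bucket in BUCKETS}


# Hypothetical labels for a mortgage document-collection MVP.
labels = {
    "Secure document upload": "must",
    "Missing-document notifications": "must",
    "Branded email templates": "should",
    "Borrower mobile app": "could",
    "Pricing engine integration": "won't",
}
scope = moscow_split(labels)
committed = scope["must"]           # hard boundary: needed to test the hypothesis
if_time_allows = scope["should"]    # polish, only if the time box permits
print(committed)
print(scope["won't"])
```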

Step-by-step: Prioritizing features for a lean custom SaaS MVP

Below is a practical process I use with teams. You can do this in a few workshops and finish a prioritized backlog in a week.

- Define the core hypothesis

Start with a one-sentence hypothesis. Keep it actionable. For example: "If we allow therapists to automate appointment reminders, no-show rates will drop 20 percent within 60 days." That’s a testable goal.

- Map the user journey

Sketch the simplest path that proves the hypothesis. Don't document every edge case. Focus on the steps a user takes to produce the outcome. For the therapy example, the journey might be: provider signs up, imports schedule, turns on reminders, monitors no-shows.

- List features tied to each step

Write down only the features that are required to make that journey happen. If a feature does not enable one of those steps, it’s probably out for the MVP.

- Score and split

Use outcome mapping, reduced RICE, or MoSCoW to categorize features into must, should, could, won't. Be ruthless. I promise your product will survive without the "could" list for now.

- Identify quick wins and technical blockers

Mark features that are quick to build and those that require deep changes or integrations. Quick wins are strong candidates for early inclusion. Technical blockers might force schedule shifts; treat them as risks and surface them early.

- Plan experiments

For features with high impact but low confidence, plan an experiment instead of a full build. Experiments can be manual workflows, landing pages, or concierge services that mimic the feature.

- Commit to a time-boxed scope

Decide how long you’ll spend on the MVP sprint and commit. Time-boxing prevents drift and forces prioritization. If something doesn't fit in the sprint, move it to the backlog and re-evaluate.

- Track a small set of metrics

Pick three metrics that map to the hypothesis and keep the dashboard tidy. If you measure everything, you learn nothing. Examples: activation rate, task completion, conversion to paid.

This process helps teams focus on building what matters and defers the rest until you have evidence.
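The journey, feature, and time-box steps above can be sketched in a few lines of Python. This is an illustration under assumptions, not a planning tool: the scores, the developer-day estimates, and the 15-day capacity are made up, and the 20 percent reserve mirrors the integration buffer suggested later in this post. Features that enable no journey step are dropped, the rest are taken greedily by score until the time box is full, and anything that doesn't fit goes to the backlog.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Feature:
    name: str
    step: Optional[str]  # journey step this feature enables, or None if it enables none
    score: int           # priority score, e.g. a reduced RICE score
    days: int            # rough build estimate in developer-days (assumed unit)


def plan_sprint(journey, features, capacity_days, reserve=0.2):
    """Split features into committed, backlog, and dropped for a time-boxed sprint."""
    # Features that don't enable a journey step are out for the MVP.
    dropped = [f.name for f in features if f.step not in journey]
    candidates = [f for f in features if f.step in journey]

    # Hold back part of the sprint as a buffer for surprise integration work.
    remaining = capacity_days * (1 - reserve)
    committed, backlog = [], []
    for f in sorted(candidates, key=lambda c: c.score, reverse=True):
        if f.days <= remaining:
            committed.append(f)
            remaining -= f.days
        else:
            backlog.append(f.name)  # doesn't fit: backlog it and re-evaluate later

    # A journey step with no committed feature means the hypothesis can't be tested yet.
    covered = {f.step for f in committed}
    uncovered = [step for step in journey if step not in covered]
    return [f.name for f in committed], backlog, dropped, uncovered


journey = ["sign up", "import schedule", "turn on reminders", "monitor no-shows"]
features = [
    Feature("Email sign-up", "sign up", 60, 2),
    Feature("CSV schedule upload", "import schedule", 80, 3),
    Feature("SMS reminders", "turn on reminders", 100, 4),
    Feature("No-show dashboard", "monitor no-shows", 64, 3),
    Feature("EHR integration", "import schedule", 48, 15),
    Feature("Dark mode", None, 10, 1),
]
committed, backlog, dropped, uncovered = plan_sprint(journey, features, capacity_days=15)
print(committed, backlog, dropped, uncovered)
```

A greedy fill is deliberately simple; it won't always find the best packing, but it keeps the highest-scoring work in scope and makes the cut line easy to explain.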

Practical examples by industry

Let's make this less abstract. Here are simplified MVP scopes for three verticals. These examples show how to keep the first release lean while still delivering value.

Healthcare: Appointment reminders and provider dashboard

Hypothesis: automated reminders reduce no-shows by 20 percent and free up provider time.

Minimal features:

- User authentication with role-based access for providers

- Simple schedule import or CSV upload

- Automated SMS and email reminders with basic templates

- Provider dashboard that shows upcoming appointments and no-show rate

- Basic audit logs and HIPAA-aware data handling plan

What to skip initially: deep EHR integration, advanced scheduling optimization, and premium reminder personalization. You can simulate advanced integrations by asking providers to upload schedules manually while validating the reminder effectiveness.
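To show how the CSV stand-in can still feed the dashboard metric, here is a hedged Python sketch. The column names (`patient_id`, `appointment_at`, `status`) and status values are assumptions about what providers would upload. The post doesn't say whether "20 percent" means relative or percentage points; this sketch assumes a relative reduction from the baseline, and the 0.15 current rate is a hypothetical value.

```python
import csv
import io


def no_show_rate(csv_text):
    """Share of past appointments marked 'no_show' in an uploaded schedule CSV."""
    rows = csv.DictReader(io.StringIO(csv_text))
    # Only appointments that already happened count; future ones are still 'scheduled'.
    past = [row for row in rows if row["status"] in ("attended", "no_show")]
    if not past:
        return 0.0
    missed = sum(1 for row in past if row["status"] == "no_show")
    return missed / len(past)


def hypothesis_met(baseline_rate, current_rate, target_reduction=0.20):
    """True if no-shows fell by at least the target, relative to the baseline."""
    if baseline_rate == 0:
        return False  # nothing to reduce, so this test can't confirm the hypothesis
    return (baseline_rate - current_rate) / baseline_rate >= target_reduction


upload = """patient_id,appointment_at,status
p1,2024-05-01T09:00,attended
p2,2024-05-01T10:00,no_show
p3,2024-05-02T09:00,attended
p4,2024-05-02T11:00,attended
p5,2024-05-03T09:00,scheduled
"""

baseline = no_show_rate(upload)  # 1 no-show out of 4 past appointments
print(baseline)
print(hypothesis_met(baseline, current_rate=0.15))
```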

Mortgage: Document collection and status tracking

Hypothesis: a streamlined document collection flow reduces time to underwrite by two days.

Minimal features:

- User onboarding for loan officers and borrowers

- Secure document upload and verification markers

- Simple checklist and status tracking per application

- Notifications for missing documents

- Audit trail for compliance

What to skip: full integration with underwriting engines, mortgage pricing engines, and complex credit automation. Instead, use a manual backend process for parts that are risky but not essential to prove the value.
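The checklist, status tracking, and missing-document notifications can start as plain set logic. The sketch below assumes a hypothetical required-document list and application IDs; actually delivering the messages could be a manual staff step at first, consistent with the advice above.

```python
# Hypothetical checklist; a real one depends on loan type and lender rules.
REQUIRED_DOCS = {"pay_stubs", "w2", "bank_statements", "photo_id"}


def application_status(uploaded):
    """Return (status, missing_docs) for one application's uploaded documents."""
    missing = sorted(REQUIRED_DOCS - set(uploaded))
    status = "ready_for_underwriting" if not missing else "awaiting_documents"
    return status, missing


def missing_document_notices(applications):
    """Build one reminder message per application that still lacks documents."""
    notices = []
    for app_id, uploaded in sorted(applications.items()):
        _, missing = application_status(uploaded)
        if missing:
            notices.append(f"Application {app_id}: please upload {', '.join(missing)}")
    return notices


applications = {
    "A-102": ["w2", "photo_id"],
    "A-101": ["pay_stubs", "w2", "bank_statements", "photo_id"],
}
print(application_status(applications["A-101"]))
print(missing_document_notices(applications))
```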

Education: Assignment workflow and analytics

Hypothesis: a simplified assignment submission and teacher feedback loop increases submission rates by 15 percent.

Minimal features:

- Teacher and student sign-in with simple roles

- Assignment creation and submission

- Teacher feedback interface and simple gradebook

- Basic analytics showing submission rates and feedback lag

What to skip: full LMS interoperability, advanced plagiarism detection, and adaptive learning engines. You can fake some features with manual checks or third-party lightweight tools while proving core value.
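The two analytics in the minimal feature list can be computed directly from submission records. This is a sketch with assumed field names (`student_id`, `submitted_at`, `feedback_at`) and made-up timestamps; it counts distinct students so resubmissions don't inflate the rate, and ignores work that hasn't received feedback yet when measuring lag.

```python
from datetime import datetime
from statistics import median


def submission_rate(submissions, roster_size):
    """Share of enrolled students who submitted at least once."""
    students = {s["student_id"] for s in submissions}
    return len(students) / roster_size


def median_feedback_lag_hours(submissions):
    """Median hours from submission to teacher feedback, ignoring ungraded work."""
    lags = [
        (s["feedback_at"] - s["submitted_at"]).total_seconds() / 3600
        for s in submissions
        if s.get("feedback_at") is not None
    ]
    return median(lags) if lags else None


submissions = [
    {"student_id": "s1", "submitted_at": datetime(2024, 9, 2, 9), "feedback_at": datetime(2024, 9, 3, 9)},
    {"student_id": "s2", "submitted_at": datetime(2024, 9, 2, 10), "feedback_at": datetime(2024, 9, 4, 10)},
    {"student_id": "s3", "submitted_at": datetime(2024, 9, 3, 8), "feedback_at": None},
]
print(submission_rate(submissions, roster_size=4))
print(median_feedback_lag_hours(submissions))
```

The median is used instead of the mean so that one assignment left ungraded over a long weekend doesn't dominate the feedback-lag number.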


Technical trade-offs that save time

Technical decisions are all about trade-offs. You want something secure and reliable, but you also want speed. Here are a few practical tips.

- Use managed services for infrastructure. Offload ops work early to save time and avoid firefighting. Examples: managed databases, messaging, and auth providers.

- Favor focused data models. Avoid building a generic data engine. Model for the first use case and evolve later.

- Delay heavy integrations. If a third-party API is not required to validate the hypothesis, mock it or use a manual process.

- Build with a modular architecture. Small, well-scoped modules let you iterate quickly and replace components as you learn.

- Automate CI/CD, but start simple. A basic automated deploy pipeline is worth it. Full-blown pipelines can wait until you have regular releases.

For example, instead of wiring up a full single sign-on solution on day one, use an industry-standard auth service for MVP users. It’s secure, faster to implement, and you can swap in SSO later without a major refactor.
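The "mock it or use a manual process, swap it later" idea works best when the rest of the code depends on a small interface rather than on a specific vendor. Below is a minimal sketch using `typing.Protocol`; `ScheduleSource`, `ManualCsvSource`, and `reminders_to_send` are hypothetical names, and the same shape would apply to an auth provider that you later replace with SSO.

```python
from typing import Protocol


class ScheduleSource(Protocol):
    """Anything that can supply upcoming appointments for a clinic."""

    def upcoming(self, clinic_id: str) -> list[dict]: ...


class ManualCsvSource:
    """MVP stand-in: appointments come from CSV uploads, not a live EHR integration."""

    def __init__(self, uploads: dict[str, list[dict]]):
        self._uploads = uploads

    def upcoming(self, clinic_id: str) -> list[dict]:
        return self._uploads.get(clinic_id, [])


def reminders_to_send(source: ScheduleSource, clinic_id: str) -> list[str]:
    """Reminder logic depends only on the interface, so the source can be swapped."""
    return [f"Reminder for {a['patient_id']} at {a['time']}" for a in source.upcoming(clinic_id)]


source = ManualCsvSource({"clinic-1": [{"patient_id": "p1", "time": "09:00"}]})
print(reminders_to_send(source, "clinic-1"))
```

When an integration is justified, a new class with the same `upcoming` method can replace `ManualCsvSource` without touching `reminders_to_send`.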

Validation tactics and what success looks like

After launch, your job is to learn fast. That means you need simple, measurable signals. I recommend picking three to five metrics tied directly to your hypothesis.

Examples:

- Activation: the percent of users who complete the key onboarding step within seven days

- Engagement: weekly active users or frequency of the core action

- Conversion: the percentage of users who move to a paid plan or a next-step conversion

- Retention: how many users return after 30 days

- Operational metrics: error rates, time to complete a workflow, support tickets
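Activation and retention are easy to get subtly wrong, so it helps to pin the definitions down in code. This sketch assumes per-user signup dates, the date each user first completed the key onboarding step, and a list of activity dates; "retained" here means any activity 30 or more days after signup, which is one reasonable reading of the definition above. All users and dates are made up.

```python
from datetime import date, timedelta


def activation_rate(signups, key_step_done, window_days=7):
    """Share of users who completed the key onboarding step within the window.

    signups: user_id -> signup date
    key_step_done: user_id -> date the key step was first completed
    """
    if not signups:
        return 0.0
    window = timedelta(days=window_days)
    activated = sum(
        1 for user, signed_up in signups.items()
        if user in key_step_done and key_step_done[user] - signed_up <= window
    )
    return activated / len(signups)


def retention_rate(signups, activity, day=30):
    """Share of users with any activity on or after `day` days past signup."""
    if not signups:
        return 0.0
    retained = sum(
        1 for user, signed_up in signups.items()
        if any(d - signed_up >= timedelta(days=day) for d in activity.get(user, []))
    )
    return retained / len(signups)


signups = {"u1": date(2024, 3, 1), "u2": date(2024, 3, 1), "u3": date(2024, 3, 5), "u4": date(2024, 3, 5)}
key_step_done = {"u1": date(2024, 3, 2), "u2": date(2024, 3, 12), "u3": date(2024, 3, 5)}
activity = {"u1": [date(2024, 3, 3), date(2024, 4, 2)], "u3": [date(2024, 3, 20)]}
print(activation_rate(signups, key_step_done))
print(retention_rate(signups, activity))
```

Note that users who never completed the step (like `u4`) stay in the denominator; dropping them would make activation look better than it is.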

Don’t obsess over perfect instrumentation from the start. Instrument the core flows and get qualitative feedback with short interviews. I prefer three to five quick interviews per week for the first month. You’ll learn more from direct user stories than from raw numbers alone.

Common pitfalls during validation

Even after you launch, teams fall into traps that waste validation time. Watch out for these.

- Measuring the wrong thing. If your metric doesn’t map to the hypothesis, you won’t learn anything useful.

- Chasing edge case feedback. A vocal user is helpful, but don’t let one outlier derail the product direction.

- Ignoring operational costs. If the MVP needs manual work to run, track that cost. It matters for scaling decisions.

- Skipping baseline measurement. Know where you start so you can measure improvement.

One startup I coached launched an MVP with manual backend checks to approve documents. They didn’t track the time staff spent. Six weeks later, the manual work doubled operating cost and their unit economics looked bad. A quick measurement would have surfaced the problem earlier.
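Tracking manual cost doesn't need tooling; a weekly log of staff minutes and volume is enough. The sketch below uses invented figures and an assumed loaded hourly rate, not data from the startup above. It shows how cost per application can rise even while volume grows, which is exactly the signal worth catching early.

```python
from dataclasses import dataclass


@dataclass
class WeeklyLog:
    week: int
    staff_minutes: float  # time staff spent on manual document checks that week
    applications: int     # applications processed that week


def manual_cost_per_application(log, hourly_rate):
    """Labor cost of the manual backend step per processed application."""
    if log.applications == 0:
        return 0.0
    hours = log.staff_minutes / 60
    return hours * hourly_rate / log.applications


# Invented numbers for illustration only.
logs = [WeeklyLog(1, 480, 40), WeeklyLog(6, 1440, 60)]
rate = 30.0  # assumed loaded hourly cost of staff time
for log in logs:
    print(log.week, manual_cost_per_application(log, rate))
```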

How to scale features after validation

Validation gives you two types of data: what users want and what’s feasible. Use both to plan scaling.

- Prioritize features that improve conversion and retention first. They drive growth.

- Move technical debt items that slow development to a prioritized backlog. Fix incrementally.

- Replace manual processes with automated flows once proven demand justifies the investment.

- Introduce integrations and performance work once you have reliable usage and clear ROI.

Think of scale as a staged investment. Don’t automate everything at once. Replace manual steps one by one as demand and unit economics justify it.
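A simple break-even check can make "as unit economics justify it" concrete. This is a sketch under assumptions: build cost, per-unit costs, weekly volumes, and the 26-week planning horizon are all hypothetical inputs you would replace with your own numbers.

```python
import math


def weeks_to_break_even(build_cost, manual_cost_per_unit, automated_cost_per_unit, units_per_week):
    """Whole weeks of volume before automating a manual step pays back its build cost."""
    weekly_savings = (manual_cost_per_unit - automated_cost_per_unit) * units_per_week
    if weekly_savings <= 0:
        return math.inf  # automation never pays back at this volume
    return math.ceil(build_cost / weekly_savings)


def should_automate(payback_weeks, horizon_weeks=26):
    """Automate only if the step pays back within the planning horizon (assumed 26 weeks)."""
    return payback_weeks <= horizon_weeks


for volume in (60, 15):
    weeks = weeks_to_break_even(6000, manual_cost_per_unit=12.0,
                                automated_cost_per_unit=2.0, units_per_week=volume)
    print(volume, weeks, should_automate(weeks))
```

The same manual step can be worth automating at one volume and not at another, which is why replacing manual steps one at a time, as demand grows, tends to beat automating everything up front.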

How Agami Technologies helps

At Agami Technologies, we specialize in turning product hypotheses into lean, testable custom SaaS MVPs. In my experience, founders who engage a focused engineering partner ship faster to validation and avoid common mistakes that blow timelines and budgets.

We work with startups and non-technical entrepreneurs in healthcare, mortgage, education, and other verticals where compliance and domain knowledge matter. Our approach is simple: define the hypothesis, scope the minimal feature set, build with sensible technology choices, and help measure the outcome. We also help plan the transition from MVP to a scalable product without losing the lessons learned from early users.

If you want a realistic partner who understands both the technology and the practical trade-offs of MVP product development, we’ve done this many times. We’ll help you cut build time and focus on the outcomes that matter.

Small checklist to use right now

Here’s a short checklist you can use to prioritize your MVP features today. It’s the quick version of the process above.

- Write a one-line hypothesis for the product’s core outcome

- Map the one user journey needed to test that hypothesis

- List features required to enable each step of the journey

- Score features by impact and confidence using a simple 1 to 5 scale

- Label features as must, should, could, won’t

- Identify technical blockers and manual workarounds

- Time-box the first development sprint

- Pick 3 metrics and plan 3 user interviews per week after launch

Want the full checklist and a simple scope worksheet? Reply SCOPE to get them.

Real-world tips I wish someone told me earlier

A few practical lessons from building many MVPs with founders and CTOs.

- Start with a paper prototype. You’d be surprised how fast you can validate flows without code.

- Use feature flags. They let you toggle functionality in production without new deployments.

- Keep the first data model simple. Over-normalizing early can slow you down.

- Prefer pragmatic security. Use proven libraries and managed services rather than building custom auth from scratch.

- Budget 20 percent of your sprint for unexpected integration work. It always shows up.
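To show what a feature flag check looks like, here is a minimal in-memory sketch. `FeatureFlags` is a hypothetical class, not a specific library's API. Toggling without a new deployment only works if the flag values are loaded at runtime from config or a flag service, which this sketch leaves out. A flag is either a plain on/off boolean or an allow-list of pilot accounts.

```python
class FeatureFlags:
    """In-memory flag store; a real setup would load flags from config or a flag service."""

    def __init__(self, defaults=None):
        self._flags = dict(defaults or {})

    def is_enabled(self, name, account_id=None):
        rule = self._flags.get(name, False)  # unknown flags are off by default
        if isinstance(rule, bool):
            return rule
        # Otherwise treat the rule as an allow-list of account ids (e.g. pilot clinics).
        return account_id in rule

    def set(self, name, rule):
        self._flags[name] = rule


flags = FeatureFlags({"new_onboarding": {"clinic-7"}, "sms_reminders": True})
print(flags.is_enabled("new_onboarding", "clinic-7"))
print(flags.is_enabled("new_onboarding", "clinic-8"))
flags.set("new_onboarding", True)  # roll out to everyone once the pilot looks good
print(flags.is_enabled("new_onboarding", "clinic-8"))
```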

I once worked with a team that wanted a perfect onboarding flow before any user saw the product. We shipped a minimal onboarding and then iterated based on real user confusion points. It saved six weeks and improved the flow more than any initial design workshop could have.

Who should be in the room during prioritization

Bring together people who can speak to user value, tech constraints, and business outcomes. The ideal group is small but diverse. My recommended list:

- Founder or product owner who knows the vision

- Technical lead or senior developer who understands constraints

- Designer who can translate features into simple flows

- Domain expert for regulated verticals like healthcare, mortgage, or education

- Someone who will own metrics and validation

Keep the meeting focused. One hour to set a baseline prioritization is usually enough if you come prepared with the hypothesis and a user journey sketch.

How to defend scope to stakeholders

Stakeholder pushback is normal. Here are a few lines I use when people ask why we’re not building a feature that seems important.

- "We can build that next, but only if the core metric moves." That ties feature requests to measurable outcomes.

- "We’ll validate the need with a test first. If users show demand, it moves up the list." This lowers perceived risk.

- "We can simulate that experience manually to learn faster." Stakeholders often forget manual approaches are valid for early validation.

Be clear about trade-offs. When you show the estimated time and impact side-by-side, stakeholders usually accept staged delivery.

Final thoughts

Prioritizing features for a lean custom SaaS MVP is less about tools and more about discipline. If you commit to a clear hypothesis, pick the smallest set of features that will test it, and measure the right things, you’ll learn faster and spend less. That learning is the real advantage of a well-scoped MVP.

In my experience, teams that focus on outcomes rather than features get to meaningful validation 40 to 50 percent faster. You don’t have to compromise quality or security to move quickly. You just need to be deliberate about what matters for the first release.

If you want help scoping an MVP, or you’d like the full checklist and scope worksheet, reply SCOPE. I’m happy to walk through your hypothesis and suggest a lean build plan.