AI Development Services That Transform Your Business In 2025: Complete Guide

Artificial intelligence stopped being a curiosity a long time ago. Today it is a fixture of product roadmaps, operational playbooks, and strategic planning. If you are a chief technology officer, a vice president of engineering, a product manager, or a team leader, the pressure is on, and you know it: how do we move beyond pilots and proofs of concept to stable, scalable AI that has a real impact on business results? I have seen teams get excited and then hit a wall.

The scenario typically goes like this: they buy a cloud service, train a model, and expect magic to happen. It hardly ever works like that. Successful AI initiatives require a well-defined implementation strategy, the right balance of skills, and a detailed execution plan that covers everything from data to deployment and ongoing maintenance. This guide breaks down the AI development journey you can follow in 2025. Think of it as a conversation with a colleague who has delivered models into production across sectors such as finance, healthcare, e-commerce, and manufacturing. Expect solid advice, common pitfalls, and a checklist you can use when talking to vendors or hiring AI engineers.

Who should read this

- CTOs and VPs of Engineering evaluating enterprise AI solutions

- Product managers planning AI features and roadmaps

- AI/ML engineers and data scientists building models and pipelines

- Tech-savvy startup founders and innovation leads

- Procurement teams and technology consultants sourcing AI development services

Skip ahead if you want the checklist or the vendor selection template. But if you have five minutes, read the next section: it will save you time and money.

Why 2025 is different — and what matters now

We are past the hype cycle where a model alone counted as innovation. Now it is about systems. In 2025, three changes matter most:

- Models are commoditized but integration is not. Pretrained models are everywhere. The competitive edge comes from tailoring models to your data and embedding them into your workflows.

- MLOps is required, not optional. Continuous delivery, monitoring, governance, and model lifecycle management are table stakes for enterprise AI.

- Cost and risk are operational concerns. Model compute, data privacy, explainability, and compliance determine whether a project scales.

I've noticed teams that focus on these three points early wind up shipping features faster. Teams that treat AI as a science project do not.

Types of AI development services you can hire

When you decide to bring in outside help, vendors typically offer a mix of these services. Understanding the differences lets you ask the right questions.

AI consulting services — Strategic guidance, feasibility research, and roadmap creation. Well suited to aligning stakeholders and securing executive buy-in.

Custom AI development — Tailor-made models and software built for your data and use cases.

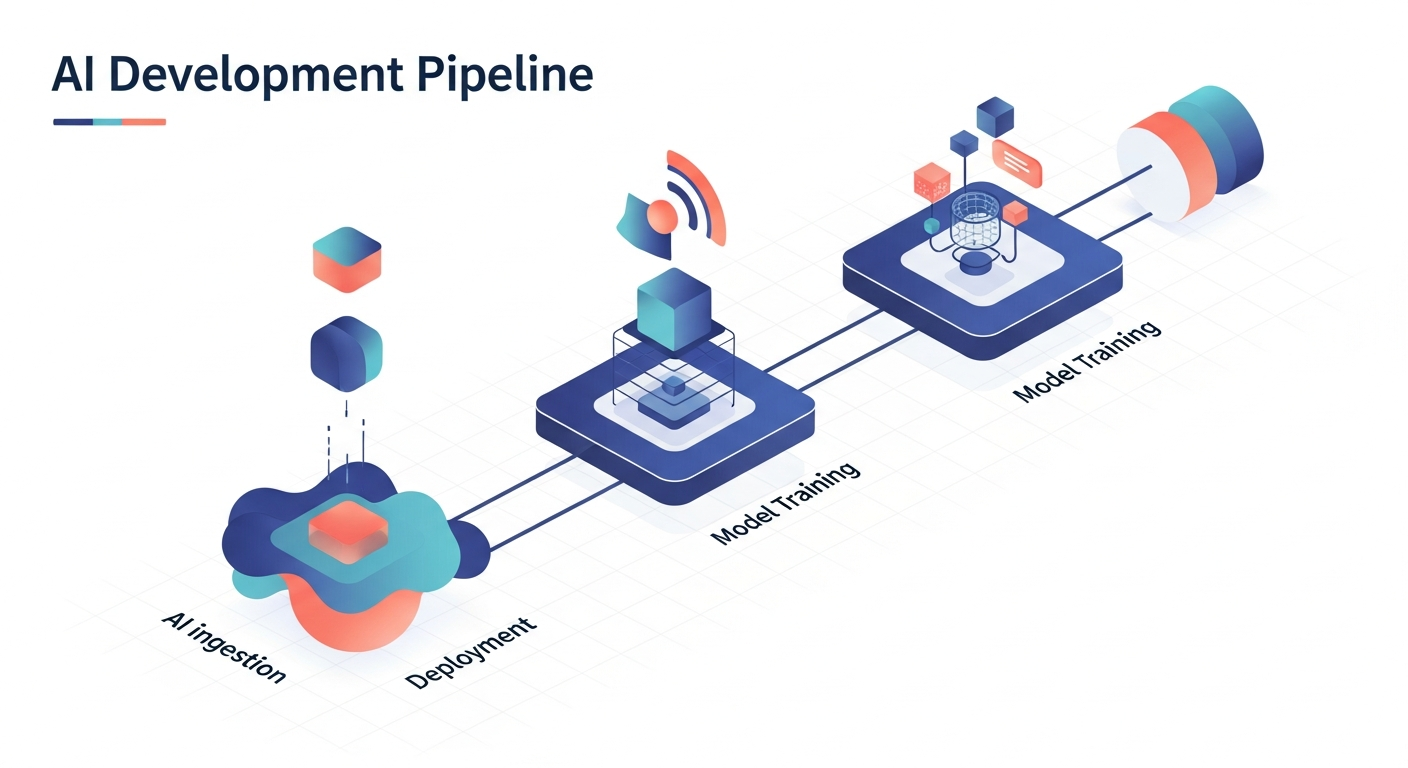

Machine learning development services — Data pipelines, model training, testing, and transfer learning.

AI integration services — Incorporating models into current systems, APIs, and user interface applications.

Enterprise AI solutions — Complete platforms designed for companies that require governance, scaling, and multi-team access.

AI model deployment and MLOps — CI/CD for models, monitoring, model versioning, and rollback mechanisms.

Neural network development — Deep learning projects, especially for computer vision, NLP, and speech.

In my experience, most companies need a combination of these. A finance company, for instance, may start with AI consulting to define risk models, then move to custom AI development, and finally use AI model deployment services to run models in production.

When to build custom AI vs use off-the-shelf

Do you require custom AI development or can you use a prebuilt model? Consider these questions:

Does your data capture processes or signals that are not available in public datasets?

Would a generic model fall short of your regulatory, latency, or accuracy requirements?

Do you need explainability, traceability, or custom metrics for compliance?

If you answered yes to any of these questions, custom AI development is most likely the way to go. If you are dealing with a common problem such as sentiment analysis of reviews, an off-the-shelf model or a fine-tuned pretrained model is a faster and more affordable solution. In many cases the answer is a mix: pretrained models speed up development, but you still need to fine-tune them and integrate them deeply with your data and business rules.

The AI development process: a practical, step-by-step workflow

I've worked on projects where teams skipped steps and paid for it later with outages, biased outputs, or staggering costs. Here is a simple process that balances speed and reliability.

- Discovery and alignment

Define the use case, success metrics, and constraints. Who are the users? What does success look like in revenue, time saved, or risk reduction? If you can't write success criteria in clear numbers, you don't have a project yet.

- Data assessment

Inventory available data, check quality, and identify gaps. Map where data lives, who owns it, and what permissions are needed. Don't assume data is clean. Expect missing labels, noisy columns, and schema drift.

- Proof of value

Build a lightweight prototype that proves the idea. Focus on a minimal model and a simple integration point. Keep it short. A two-week spike can reveal whether the core idea works.

- Model development

Iterate on algorithms, feature engineering, and model architecture. Track experiments and metrics; use reproducible pipelines. Decide early whether to use neural network development or simpler models.

- MLOps and deployment planning

Design the deployment path: batch versus real-time, latency, scaling, model retraining cadence, and rollback plans. Put monitoring and alerting requirements in place before deployment.

- Integration and productization

Embed the model into the product. This is where AI integration services matter. Think about UX, API design, and how outputs will be used by downstream systems or humans.

- Validation and governance

Run A/B tests, check for fairness and bias, and log decisions for audit. Include security and privacy reviews. Production is where compliance mistakes become expensive.

- Scaling and maintenance

Automate retraining, monitor data drift, and manage cost. Keep a backlog for model improvements and a playbook for incidents.

Notice how product and engineering needs intersect at every stage. If you ignore either side, you'll build an impressive model that nobody uses.
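
For the validation step above, a quick significance check helps decide whether an A/B result is signal or noise. The sketch below is one common approach, a pooled two-proportion z-test, and assumes the metric is a conversion rate; the function name and inputs are illustrative, not part of any specific tool.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / n_a: conversions and users in the control group.
    conv_b / n_b: conversions and users in the treatment group.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("sample sizes must be positive")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

Decide the sample size and significance level before the test starts; checking repeatedly and stopping at the first "significant" reading inflates false positives.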

Data: the real engine of AI

People say AI is data hungry. That understates it. Better models often come from better data, not bigger models. Here’s what practical data work looks like.

- Start with a data map. Where are customer records, logs, telemetry, and third-party inputs? Who can grant access?

- Create a labeling strategy. Use human-in-the-loop systems for tricky labels and validate inter-annotator agreement.

- Address drift early. Deploy simple drift detectors so you can spot when model inputs change.

- Build feature stores for reproducibility. They save a ton of debugging time during deployment.
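
A simple drift detector of the kind mentioned above can be sketched with the population stability index (PSI), which compares the binned distribution of a feature at training time with what production is sending now. This is one of several possible detectors, chosen here as an assumption; the bin count and the small floor value are illustrative choices.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """Compare two samples of one numeric feature; 0.0 means identical bins."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 when the baseline has a single distinct value.
    width = (hi - lo) / bins or 1.0

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range go into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A commonly quoted rule of thumb treats PSI above roughly 0.2 as a shift worth investigating, but the right threshold depends on the feature and should be tuned on your own history.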

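One common mistake: training on merged data that will never exist in production. If the training features include information that only becomes available after the prediction is made, such as labels derived from future user actions, the model will score well offline and fail in real life. Guard against this by building training examples only from data that would exist at prediction time.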
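
One way to guard against this kind of leakage is to build every training example around an explicit cutoff time: features come only from events before the cutoff, and the label comes only from the window after it. The sketch below assumes a hypothetical event format of `(timestamp, kind)` tuples and a 30-day label window; both are illustrative.

```python
from datetime import datetime, timedelta


def build_example(events, cutoff, label_window=timedelta(days=30)):
    """Build (features, label) for one user without looking past the cutoff.

    events: list of (timestamp, kind) tuples, e.g. (datetime(...), "purchase").
    """
    # Features may only use what was known at prediction time.
    past = [e for e in events if e[0] < cutoff]
    # The label is the only place where post-cutoff data is allowed.
    future = [e for e in events if cutoff <= e[0] < cutoff + label_window]
    features = {
        "n_events_before_cutoff": len(past),
        "n_purchases_before_cutoff": sum(1 for _, kind in past if kind == "purchase"),
    }
    label = int(any(kind == "purchase" for _, kind in future))
    return features, label
```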

Model choices: pick the right tool, not the shiniest one

Neural network development has exploded, and transformer architectures dominate NLP. But don’t default to deep learning for everything. Simpler models can be faster to ship and easier to maintain.

Ask these questions when choosing a model:

- Is interpretability important? If yes, rule-based systems or tree-based models might be better.

- What's the latency budget? Deep networks may need optimized inference stacks.

- What is the data volume? Deep models need more data to generalize well.

In my experience, a hybrid approach works well: use pretrained neural models for perception tasks, then wrap them with rule-based logic for business constraints.
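
The hybrid pattern can be sketched as a thin wrapper: the model produces a label and a confidence, and plain rules decide what happens next. The `classify` callable, the blocked label, and the confidence threshold below are hypothetical placeholders, not a specific library's API.

```python
from typing import Callable, Tuple


def route_prediction(
    item: object,
    classify: Callable[[object], Tuple[str, float]],
    blocked_labels: frozenset = frozenset({"prohibited"}),
    min_confidence: float = 0.8,
) -> dict:
    """Apply business rules on top of a model's (label, confidence) output."""
    label, confidence = classify(item)
    if label in blocked_labels:
        # Hard business constraint: never auto-accept these labels.
        action = "reject"
    elif confidence < min_confidence:
        # Uncertain predictions go to a person instead of being trusted.
        action = "human_review"
    else:
        action = "accept"
    return {"label": label, "confidence": confidence, "action": action}
```

Keeping the rules outside the model means a policy change is a code review, not a retraining job.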

MLOps and production readiness

MLOps is not a department. It is a set of practices that makes your model reliable and maintainable. Think of it as DevOps for models, but with extra steps for data and monitoring.

Key MLOps components:

- CI/CD for model training and deployment

- Model versioning and lineage tracking

- Automated unit tests for data and model behavior

- Monitoring for concept drift, performance, and data quality

- Canary deployments and rollback mechanisms

One thing teams miss: alerts for silent failures. A model might return valid outputs but stop improving business metrics. Set up automated alerts for metric deltas, not only for errors.
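
A metric-delta alert of this kind can be very small. The sketch below compares a recent window of a business metric with a baseline window and flags a relative drop beyond a threshold; the 10 percent default and the window contents are assumptions to adapt to your own metrics.

```python
from statistics import mean


def metric_delta_alert(baseline_values, recent_values, max_relative_drop=0.10):
    """Flag a silent failure when a business metric drops versus its baseline."""
    baseline = mean(baseline_values)
    recent = mean(recent_values)
    if baseline == 0:
        raise ValueError("baseline mean is zero; relative change is undefined")
    relative_change = (recent - baseline) / abs(baseline)
    return {
        "baseline": baseline,
        "recent": recent,
        "relative_change": relative_change,
        # Alert on drops only; improvements do not page anyone.
        "alert": relative_change <= -max_relative_drop,
    }
```

For example, feeding it last month's daily conversion rates as the baseline and the last seven days as the recent window catches a model that still returns valid outputs but no longer moves the metric.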

Deployment patterns

There are a few common deployment patterns. Pick the one that matches product constraints.

- Batch scoring — Good for offline analytics, billing, or bulk recommendations.

- Real-time API inference — Needed for personalization, fraud detection, and chatbots. Requires attention to latency and autoscaling.

- On-device inference — Useful for mobile apps and edge scenarios where latency or privacy matter.

- Hybrid — Combine on-device preprocessing with cloud inference for the best of both worlds.

I recommend starting with the simplest pattern that meets requirements. Real-time APIs are tempting, but they add ops complexity. If batch can deliver the same business value, use batch first.

Security, privacy, and compliance

You cannot treat these as an afterthought. In regulated industries like healthcare and finance, compliance shapes architecture choices.

- Document data lineage for audits. Know the source and transformations for every feature.

- Minimize PII exposure. Use tokenization and encrypted stores; consider differential privacy for analytics.

- Implement access controls and secure model endpoints. Treat models as part of your attack surface.

- Plan for explainability. Regulators and customers may ask how a decision was reached.

A common pitfall: teams launch models and only then realize they lack the logging required for audits. Build logging and traceability into the design from day one.
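
As a minimal sketch of what day-one logging might look like, the function below writes one audit record per decision and replaces the raw user identifier with a keyed hash. The field names are hypothetical, and keyed hashing is pseudonymization rather than anonymization; the feature payload still needs its own PII review.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone


def tokenize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 token (same input, same token)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def audit_record(user_id, model_version, features, prediction, secret_key):
    """Return one JSON line describing a model decision for later audits."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "subject_token": tokenize(user_id, secret_key),  # no raw ID in the log
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
    }
    return json.dumps(record, sort_keys=True)
```

In practice the secret key would come from a secrets manager, and the records would go to an append-only store with its own access controls.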

Cost considerations and how to estimate AI development cost

Let's be blunt: AI development cost varies. A tiny prototype costs a few thousand dollars. A full enterprise AI solution with strict SLAs and heavy data work can run into millions. Here are the levers that drive cost:

- Data engineering effort (cleaning, labeling, pipelines)

- Model complexity and training compute

- Integration work with legacy systems

- MLOps sophistication and monitoring needs

- Compliance, security, and audit requirements

For more predictable budgeting, break work into phases: discovery, MVP, production, and scale. Each phase has clear deliverables and success metrics. This staged approach lowers risk and gives you checkpoints to reassess investment.

Typical pricing models from vendors:

- Fixed-price engagements for clearly defined MVPs

- Time and materials for exploratory work

- Retainers or managed services for ongoing MLOps and support

When evaluating quotes, compare apples to apples. A low-cost vendor might omit monitoring or retraining responsibilities, which costs more later.

Hiring: how to hire AI developers and build an internal team

Hiring is tricky in 2025. The roles you need depend on your goals. Here’s a practical team composition I've used:

- Data engineers to build pipelines and feature stores

- ML engineers to productionize models and implement MLOps

- Data scientists for experimentation and model design

- Product engineers and UX designers to integrate AI into products

- Security and compliance specialists for regulated deployments

When you hire, test for practical skills. Ask candidates to review a real dataset, sketch a simple pipeline, or explain trade-offs for model deployment. A list of research papers is less convincing than pragmatic skills that match your stack.

Outsourcing vs. in-house: I usually recommend a hybrid model. Keep core IP and strategic parts in-house, and use an AI software development company for acceleration and specialist expertise.

Choosing a vendor: what to ask and what to watch for

Selecting the right partner can make or break your program. Here are questions that separate competent vendors from the rest:

- Do you have domain experience relevant to our industry?

- Can you show production case studies and measurable business outcomes?

- How do you handle data security, governance, and IP?

- What does your AI development process look like?

- How do you price deployments, maintenance, and scaling?

- Who will transfer knowledge to our team? What training is included?

Beware of vendors that over-promise accuracy without providing evaluation protocols or holdback test sets. Ask for reproducible benchmarks and clear KPIs tied to the business.
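
One way to make a holdback set reproducible is to assign records to it by hashing a stable ID, so you and the vendor can both recompute the same split and neither side can quietly tune on it. The sketch below assumes each record has a string ID; the salt and the 20 percent fraction are illustrative.

```python
import hashlib


def in_holdback(record_id: str, holdback_fraction: float = 0.2, salt: str = "eval-v1") -> bool:
    """Deterministically decide whether a record belongs to the holdback set."""
    digest = hashlib.sha256(f"{salt}:{record_id}".encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < holdback_fraction
```

Because the assignment depends only on the ID and the salt, new records fall into the same split without any shared state, and changing the salt creates a fresh holdback set for a new evaluation round.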

Common mistakes and how to avoid them

I could write a book on this, but here's the short list of traps I see most often.

- No success metric — Projects without clear KPIs drift into vanity metrics. Define business impact up front.

- Data problems ignored — Assuming good data leads to rework. Allocate at least 40 percent of effort to data tasks for serious projects.

- Overfitting to benchmarks — Models tuned to historical datasets often fail in production. Validate on realistic, recent data.

- No retraining plan — Models degrade. Schedule retraining and define triggers based on drift monitoring.

- Embedding models without UX changes — A great model with poor UX has low adoption. Co-design with product teams.

- Ignoring costs — Inference bills can surprise you. Estimate operational costs during architecture design.
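
For the inference-cost point above, a back-of-the-envelope estimate during architecture design is often enough to catch surprises. The sketch below is a deliberately simplified model: it assumes steady traffic, identical instances, and a target utilization, and it ignores minimum instance counts, idle capacity, and data transfer. All parameter names are illustrative.

```python
def estimate_inference_cost(
    requests_per_day,
    avg_latency_s,
    instance_cost_per_hour,
    concurrency_per_instance=1,
    target_utilization=0.6,
    days=30,
):
    """Rough cost per request and per month for a real-time endpoint."""
    if avg_latency_s <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("latency must be positive and utilization in (0, 1]")
    # Requests one instance can serve per hour at the target utilization.
    capacity_per_hour = 3600 * concurrency_per_instance / avg_latency_s * target_utilization
    cost_per_request = instance_cost_per_hour / capacity_per_hour
    return {
        "cost_per_request": cost_per_request,
        "monthly_cost": cost_per_request * requests_per_day * days,
    }
```

Running it with a few latency and traffic scenarios shows quickly whether a real-time design is affordable or whether batch scoring deserves a second look.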

If I had to give one short piece of advice: measure outcomes, not model accuracy. Business impact keeps you honest.

Case snapshots: realistic examples

Here are short, anonymized examples that show different approaches and outcomes. They are deliberately simple so you can spot the patterns.

- Retail personalization — A mid-size retailer used fine-tuned recommender models and simple rules to boost email conversion by 18 percent in three months. They started with an MVP that scored on past purchases and iterated to include browsing sessions. Key win: fast integration with the existing marketing stack.

- Fraud detection for payments — A payments provider deployed a real-time API with a lightweight ensemble model. The model reduced false positives by 25 percent and saved analysts time. Tip: optimizing for latency and explainability mattered more than squeezing a tiny accuracy gain.

- Healthcare readmission prediction — A hospital system built a model to predict readmissions. The team focused on data lineage, clinician workflows, and regulatory logs. Result: a 12 percent reduction in avoidable readmissions after integrating model suggestions into discharge planning.

Each project followed the same pattern: clear metrics, short MVP, integration focus, and a plan for operations.

Tools and frameworks that matter in 2025

You won't get far without a practical toolset. Here are categories and examples, but focus more on capability than brand:

- Data platforms — Feature stores, data lakes, and workflow orchestrators

- Modeling frameworks — PyTorch, TensorFlow, JAX, and high-level libraries

- Pretrained models and model hubs — Use them for transfer learning and acceleration

- MLOps tools — CI/CD, model registries, monitoring, and deployment platforms

- Inference runtimes — Optimized inference libraries for latency and cost control

Pick tools that integrate with your cloud provider and team skills. A shiny new framework can slow you down if no one knows it. In my experience, standardizing on a small set of tools and adding integrations as needed beats trying to use everything at once.

Measuring success: KPIs that matter

Don't measure only model metrics. Here are KPIs to track across the lifecycle:

- Business KPIs: revenue lift, cost savings, churn reduction

- Product KPIs: adoption rate, user satisfaction, task completion time

- Model KPIs: precision, recall, AUC, but contextualized to business impact

- Operational KPIs: mean time to restore, model latency, inference cost per request

- Data KPIs: percent of missing data, labeling throughput, drift rates

Set SLAs for operational KPIs so teams know when to act. For example, a drift alert that triggers when model accuracy drops by 5 percent is more actionable than a vague "watch performance" note.
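
To contextualize model KPIs in business terms, it can help to report precision and recall next to the estimated cost of the errors behind them. The sketch below assumes a binary classifier and per-error costs that you supply; the cost figures in any real use would come from your own finance or operations data.

```python
def model_kpis(tp, fp, fn, cost_per_fp, cost_per_fn):
    """Precision and recall from confusion counts, plus the cost of the errors."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # Each error type is weighted by what it costs the business.
    error_cost = fp * cost_per_fp + fn * cost_per_fn
    return {"precision": precision, "recall": recall, "error_cost": error_cost}
```

Two models with the same AUC can differ sharply on `error_cost` when false negatives are far more expensive than false positives, which is often the case in fraud or readmission scenarios.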

Scaling AI across the enterprise

Scaling is often cultural as much as technical. When you move beyond a single use case, you will face governance, reuse, and prioritization challenges.

Practical steps to scale:

- Create an AI platform team responsible for tools, templates, and guidelines

- Build shared feature stores and model registries to reduce duplicated work

- Set a clear approval process for new AI projects, focusing on impact and feasibility

- Offer training and playbooks for product teams to integrate AI responsibly

I've seen companies waste cycles when each team invents its own pipelines. Centralize the plumbing and decentralize the product decisions.

Procurement and contracts: negotiate smarter

Buying AI development services is different from buying software licenses. Negotiate around outcomes, ownership, and support.

- Define deliverables and acceptance criteria clearly in the SOW

- Clarify IP and model ownership. Does the vendor keep a copy of the model or data?

- Include post-deployment support, monitoring, and retraining responsibilities

- Use milestone payments tied to measurable results, not just time spent

If you plan to work with consultants, require reproducible notebooks and clear documentation. If a vendor claims a secret algorithm, insist on independent validation or a transparent evaluation protocol.

Roadmap template: 90-day plan for an AI MVP

If you want a quick plan to kick off an AI project, here is a pragmatic 90-day roadmap I've used with teams:

- Days 1-14 — Discovery, stakeholder alignment, and data mapping. Deliverable: project charter and success metrics.

- Days 15-30 — Data extraction, sample labels, and initial prototype. Deliverable: working notebook and baseline metrics.

- Days 31-60 — Model iteration, infra selection, and integration plan. Deliverable: MVP model and API spec.

- Days 61-90 — MLOps setup, validation, A/B test, and launch plan. Deliverable: production-ready model with monitoring and rollback playbook.

This timeline assumes a small, focused team and well-scoped problem. Adjust the cadence for regulatory reviews or extensive labeling needs.

How Agami Technologies can help

At Agami Technologies, we focus on delivering practical enterprise AI solutions that align with business goals. We combine AI consulting services, custom AI development, and managed MLOps so you can move from prototype to production without the typical pitfalls.

We often work with clients on AI implementation strategy, AI model deployment, and AI integration services. If you want a partner to accelerate your roadmap, we help prioritize use cases, build MVPs, and set up operational frameworks for long-term success.

Helpful checklist before you start conversations

- Define 1 to 3 clear business outcomes and measurable KPIs

- Have a data inventory and identify missing labels

- Decide whether latency, explainability, or compliance will constrain design

- Choose a deployment target: batch, real-time, device, or hybrid

- Allocate budget for ongoing ops and retraining, not just initial development

- Plan for knowledge transfer and documentation from any vendor engagement

Common questions clients ask

Here are quick answers to frequent questions I see during scoping calls.

- How long does it take to build an MVP? Typically 6 to 12 weeks for a focused use case. More complex, regulated projects take longer.

- How much does AI development cost? Cost varies with scope. Expect prototypes in the low five figures and enterprise solutions in the high five to seven figures over multiple years. Break your budget down by phase.

- Should we hire or partner? If you need to move fast, partner. If the capability is core IP, invest in hiring. Often the best route is a hybrid approach.

- What tools should we standardize on? Pick a small stack aligned to your cloud provider and skills. Prioritize reproducibility and deployment compatibility.

Final thoughts: start structured, iterate fast

AI development in 2025 rewards structure and pragmatism. Start with clear outcomes, invest in data and MLOps early, and prioritize integration with product workflows. Expect surprises and build a playbook for when things break.

If you keep one idea from this guide, let it be this: measure impact before scale. A small model that shifts a key metric is worth more than a cutting-edge architecture that never gets used.

FAQs

What is the difference between custom development and prebuilt models?

Answer: Custom development uses your own data and business needs to build a tailored solution. Prebuilt models are general systems you fine-tune for your use case. Go custom when you need higher accuracy, compliance, or unique logic. Prebuilt is faster and great for common tasks. Many teams combine both.

How much should I budget and what drives the cost?

Answer: Budgets range from the low thousands for small prototypes to much higher for full enterprise systems. The biggest cost factors are data setup, complexity of the system, integration with existing tools, monitoring, and security needs. Split the work into clear phases so investment grows with results.

When should I outsource instead of building in-house?

Answer: Outsource when you need speed, special skills, or temporary capacity. Build in-house when the system is strategic, long-term, or involves sensitive data. Many companies start with a partner and transition ownership later.

What are production operations and why are they important?

Answer: Production operations include monitoring performance, updating models, and ensuring data quality. Once the system is live, results can fade if they are not tracked. Ongoing operations protect business value and prevent silent failures.

How do I know if my data is ready?

Answer: Check whether you have enough coverage of real-world scenarios, clean and consistent data, proper access in production, and a close match to real conditions. Start with a data review to avoid delays later.

How long does it take to get a system into production?

Answer: A focused first version usually takes 6 to 12 weeks with clear scope and available data. Larger or regulated projects can take many months depending on complexity.

How do I choose between batch, real-time, or on-device?

Answer: Batch is best when instant results are not needed. Real-time supports fast decisions and live user interactions. On-device helps with privacy or poor network connectivity. Pick the simplest choice that meets the product need.

What are the common mistakes that cause failure?

Answer: No clear success goals. Ignoring data cleanup. Tuning only for old data. No plan for updates. Poor user experience. Unexpected operating cost. Always measure business outcomes, not just technical scores.

How do I select the right development partner?

Answer: Look for proven results in your industry. Confirm strong security and ownership clarity. Ask for a transparent process with real checkpoints. Ensure they commit to knowledge transfer and long-term support.

What KPIs should I track beyond accuracy?

Answer: Focus on revenue impact, cost savings, and customer outcomes. Track user adoption and trust. Monitor performance, reliability, and cost per use. Keep an eye on data quality over time. Business value is the real measure.