AI-Assisted Software Development: A Comprehensive Guide with Practical Prompts

AI is no longer a novelty in engineering teams. It is changing how we design, build, test, and run software. In this guide I walk through practical ways to adopt AI-assisted software development, share ready-to-use prompts, point out common pitfalls, and suggest workflows that scale from startups to enterprises. I've worked with product teams and engineering leaders who wanted practical advice, not hype. This is that advice.

Why AI-Assisted Software Development Matters

Let's be honest. The day-to-day of software development is full of repetitive tasks. Boilerplate code, testing edge cases, and reviewing pull requests take time away from solving the core business problems. AI-assisted software development helps teams move faster by automating routine work, surfacing better decisions earlier, and freeing developers to focus on higher-value tasks.

In my experience, the most tangible benefits are faster time to market, fewer regressions, and more consistent code quality. For enterprises, combining AI with strong engineering practices results in scalable software development that supports long-term growth. For startups, it helps teams punch above their weight.

Keywords to keep in mind as you read: enterprise AI solutions, AI in software engineering, AI coding tools, AI-driven automation, machine learning development, and scalable software development.

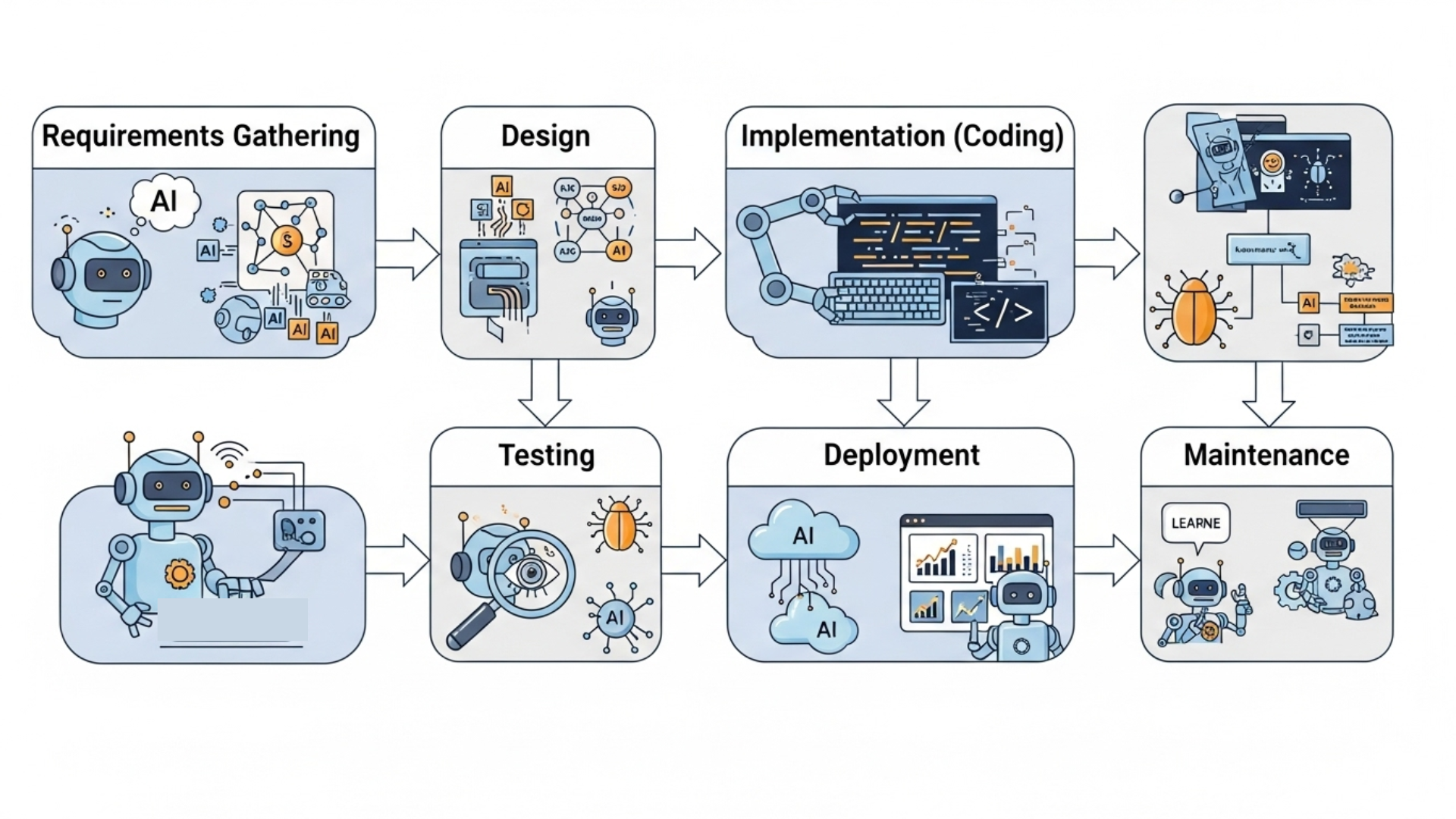

How Teams Use AI Across the Development Lifecycle

AI can plug in at almost every stage. Here are the common entry points and what they practically look like.

- Idea to specification. Use AI to draft user stories, acceptance criteria, API contracts, and sequence diagrams. It saves time and reduces ambiguity.

- Design and architecture. Generate architectural options, list tradeoffs, and evaluate scalability risks. AI helps you iterate designs faster.

- Development. AI coding tools speed up implementation, suggest idiomatic patterns, and create unit tests for tricky edge cases.

- Code review and security. Automated code reviews, vulnerability scanning, and suggested fixes reduce human review load and improve reliability.

- Testing and QA. Generate test cases, fuzz inputs, and write integration tests that cover edge cases you might miss.

- Deployment and operations. Use AI to tune deployment configurations, estimate resource needs, and detect anomalies in production.

- MLOps and model lifecycle. For machine learning development, AI supports model training automation, data drift detection, and CI for models.

Practical AI Prompts for Different Roles

Prompts are the glue between people and AI. Below are practical prompts I use with engineering teams. They are simple, repeatable, and can be adapted to your codebase or domain. Try them in your preferred AI coding tool.

Prompts for Developers

- Given this function (paste code), suggest three refactorings to improve readability and performance. Explain the tradeoffs and provide the updated code.

- Write unit tests for this API endpoint (paste controller). Cover success cases and the five most likely error conditions. Use pytest and simple fixtures.

- Review this pull request summary and list potential security issues and missing tests. Provide concise suggested changes I can paste into the PR comments.

Prompts for Engineering Managers and Architects

- Compare these two architecture options for a multi-tenant SaaS service: shared database vs separate databases. List pros and cons focusing on cost, scalability, and operational complexity.

- Given our constraints (low latency, 99.95% uptime, on-prem data residency), propose an infrastructure diagram and a rollout plan that minimizes downtime.

- Create a migration checklist from monolith to microservices. Include code, data, testing, and monitoring steps for a 6-month plan.

Prompts for Product Managers

- Write a clear API contract for a "user preferences" service supporting feature flags and versioning. Include request and response examples and error codes.

- Draft acceptance criteria and test cases for a new file upload feature that supports 5GB files, resumable uploads, and virus scanning.

- Summarize the main UX tradeoffs for adding AI-driven code completion to an IDE for internal developers. Suggest rollout metrics to track adoption and ROI.

Prompts for Data Scientists and ML Engineers

- Outline an MLOps pipeline for a recommendation model, including data collection, feature store, training, validation, deployment, and drift monitoring. Suggest tools and a simple CI/CD flow.

- Given an imbalanced dataset with 2% positive cases, recommend sampling strategies, metric choices, and model evaluation steps to make fair decisions.
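To see why the last prompt asks about metric choices, here is a minimal sketch in plain Python. It assumes a hypothetical dataset of 10,000 rows with 2% positives; the counts, function names, and the "real model" numbers are made up for illustration. It shows how a useless model can still score 98% accuracy, and how inverse-frequency class weights are commonly derived.

```python
from __future__ import annotations


def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Compute precision, recall, and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def balanced_class_weights(n_negative: int, n_positive: int) -> dict[int, float]:
    """Inverse-frequency weights: n_samples / (n_classes * n_class_samples)."""
    total = n_negative + n_positive
    return {0: total / (2 * n_negative), 1: total / (2 * n_positive)}


# Hypothetical dataset: 10,000 rows, 2% positive.
N_NEG, N_POS = 9_800, 200

# A model that always predicts "negative" looks accurate but finds nothing.
baseline_accuracy = N_NEG / (N_NEG + N_POS)
baseline_metrics = precision_recall_f1(tp=0, fp=0, fn=N_POS)

# A hypothetical real model: catches 150 of 200 positives with 100 false alarms.
model_metrics = precision_recall_f1(tp=150, fp=100, fn=50)

# Positives end up weighted 49 times heavier than negatives.
weights = balanced_class_weights(N_NEG, N_POS)
```

The always-negative baseline reaches 0.98 accuracy with zero recall, which is why precision, recall, and F1 are usually better choices here than accuracy.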

Example Workflows You Can Start With

Here are workflows that I often recommend. They are incremental, so teams can adopt them without huge upfront cost.

1. AI-Assisted Developer Workflow

Start small. Let developers adopt AI coding tools individually. Encourage them to use AI for test generation, code snippets, and boilerplate. Pair this with a simple policy: always review AI suggestions and run tests locally before committing.

Toolset example: code LLM in IDE, CI with unit tests, SAST tool in pipeline.

2. CI/CD with AI Checks

Add AI-based linting and security scans to your CI pipeline. When a pull request is opened, the pipeline runs automated checks that include:

- Style and linting

- Vulnerability detection

- Automated unit and integration tests

- AI-summarized PR description and change impact

This saves reviewers time because they get a concise summary and flagged risks up front.
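The gating step in that pipeline can be sketched in a few lines of Python. This is a rough sketch under my own assumptions: the `CheckResult` type, the check names, and the choice to treat the AI summary as advisory rather than blocking are all hypothetical, not a feature of any particular CI system.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str        # e.g. "lint", "vulnerability-scan"
    passed: bool
    blocking: bool   # blocking checks fail the pipeline; others only warn
    details: str = ""


def summarize_checks(results: list[CheckResult]) -> tuple[bool, str]:
    """Return (ok_to_merge, comment_text) for a list of check results."""
    ok = True
    lines = []
    for r in results:
        if r.passed:
            status = "PASS"
        elif r.blocking:
            status = "FAIL"
            ok = False
        else:
            status = "WARN"
        suffix = f": {r.details}" if r.details else ""
        lines.append(f"- [{status}] {r.name}{suffix}")
    header = "All blocking checks passed." if ok else "Blocking checks failed."
    return ok, header + "\n" + "\n".join(lines)


# Hypothetical results for one pull request.
results = [
    CheckResult("lint", passed=True, blocking=True),
    CheckResult("vulnerability-scan", passed=False, blocking=True,
                details="1 high-severity dependency issue"),
    CheckResult("unit-and-integration-tests", passed=True, blocking=True),
    CheckResult("ai-pr-summary", passed=False, blocking=False,
                details="summary unavailable, reviewer should read the diff"),
]
ok_to_merge, comment = summarize_checks(results)
```

Keeping the AI-generated summary non-blocking means a model outage slows reviewers down but never stops a merge on its own.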

3. MLOps Pipeline for Machine Learning Development

Machine learning development has its own lifecycle. Set up a pipeline that automates data validation, model training, evaluation, and deployment. Add model monitoring to detect drift and automate rollback if performance drops.

Start with a single model in production and expand once you have reliable CI for models. I recommend versioning model artifacts in a model registry and keeping feature definitions and metadata in a feature store.
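As one way to picture the drift check and automated rollback, here is a small sketch using the population stability index (PSI), a common drift measure. PSI is my choice for illustration, not the only option. The bin proportions, the 0.25 PSI limit (a widely quoted rule of thumb), and the 0.90 accuracy floor are all assumptions you would tune for your own model.

```python
from __future__ import annotations

import math


def population_stability_index(expected: list[float], actual: list[float]) -> float:
    """PSI between two binned distributions, each given as proportions per bin."""
    eps = 1e-6  # avoids log(0) when a bin is empty
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def should_roll_back(psi: float, live_accuracy: float,
                     psi_limit: float = 0.25, min_accuracy: float = 0.90) -> bool:
    """Roll back when inputs have drifted badly or live quality has dropped."""
    return psi > psi_limit or live_accuracy < min_accuracy


# Hypothetical feature distribution at training time vs. in production.
training_bins = [0.25, 0.25, 0.25, 0.25]
live_bins = [0.05, 0.15, 0.30, 0.50]

psi = population_stability_index(training_bins, live_bins)  # about 0.56
rollback = should_roll_back(psi, live_accuracy=0.93)        # True, drift is large
```

In a real pipeline this check would run on a schedule, and a positive result would trigger redeploying the previous model version from the registry.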

Scaling AI Integration Across the Enterprise

Scaling AI from a team experiment to an enterprise capability requires structure. Here are the pieces I’ve seen work.

- Governance. Define policies for data usage, privacy, and model approvals. Keep a central catalog of models and their owners.

- Platform. Provide shared tools and APIs for common needs, like embeddings, text generation, and code analysis.

- Templates and prompts. Standardize prompts and templates so outputs are predictable and auditable.

- Observability. Track performance, costs, and usage so you can measure ROI and identify trouble spots.

Without these, you will see fragmentation, duplicated effort, and unpredictable costs.
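To make the "templates and prompts" piece concrete, here is a minimal sketch of a versioned prompt library using only the Python standard library. The `PromptTemplate` class, the library layout, and the fingerprint idea are hypothetical illustrations, not a specific product's API.

```python
import hashlib
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptTemplate:
    name: str
    version: str
    text: str  # uses $placeholders in string.Template syntax

    @property
    def fingerprint(self) -> str:
        """Short content hash to record in audit logs next to the output."""
        return hashlib.sha256(self.text.encode("utf-8")).hexdigest()[:12]

    def render(self, **values: str) -> str:
        # substitute() raises KeyError when a placeholder is missing,
        # which catches incomplete prompts before they reach a model.
        return Template(self.text).substitute(**values)


# Hypothetical shared library, keyed by (name, version).
PROMPT_LIBRARY = {
    ("refactor", "1.0"): PromptTemplate(
        name="refactor",
        version="1.0",
        text=("Refactor this $language function for clarity and performance. "
              "Keep the public API identical.\n\n$code"),
    ),
}

prompt = PROMPT_LIBRARY[("refactor", "1.0")]
rendered = prompt.render(language="Go", code="func Add(a, b int) int { return a + b }")
```

Logging the template name, version, and fingerprint with each model call is what makes outputs auditable later.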

Security, Compliance, and Intellectual Property

Security and compliance are non-negotiable, especially for enterprise AI solutions. I’ve noticed teams often underestimate the legal and data risks when they first introduce AI coding assistants.

Here are practical considerations:

- Data privacy. Don’t send sensitive customer data or proprietary source code to external APIs without reviewing the vendor’s data handling policies.

- Access control. Gate AI tools behind identity controls and logging so you know who asked what and when.

- IP risks. Use models that are cleared for commercial use or operate them privately. Keep a record of generated outputs used in production.

- Regulatory. If you operate in regulated industries, add additional approval steps for model usage and outputs.
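The data privacy and access control points can be combined in a thin wrapper that redacts obvious secrets and records who asked what. The sketch below is illustrative only: the two regex patterns are deliberately simple assumptions and would miss many real secrets, and the `audit_record` shape is hypothetical.

```python
import re
from datetime import datetime, timezone

# Illustrative patterns only; a real deployment needs a reviewed, broader set.
REDACTION_PATTERNS = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "[EMAIL]"),
    (re.compile(r"\b(api[_-]?key|secret|token|password)\b(\s*[:=]\s*)\S+",
                re.IGNORECASE), r"\1\2[REDACTED]"),
]


def redact(text: str) -> str:
    """Replace emails and key=value style secrets before text leaves the network."""
    for pattern, replacement in REDACTION_PATTERNS:
        text = pattern.sub(replacement, text)
    return text


def audit_record(user: str, tool: str, prompt: str) -> dict:
    """Build a log entry answering: who asked what, with which tool, and when."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "tool": tool,
        "prompt": redact(prompt),  # never log the raw prompt
    }


raw = 'Why does login fail for jane.doe@example.com? config: api_key = "sk-12345"'
safe = redact(raw)
record = audit_record(user="dev-17", tool="ide-assistant", prompt=raw)
```

Pattern-based redaction is a safety net, not a guarantee, so it works best alongside the vendor review mentioned above.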

Monitoring, Metrics, and Measuring ROI

People love AI promises. The reality is you need concrete metrics. Choose a few that matter and measure them consistently.

Operational metrics

- Build and deploy frequency

- Lead time from commit to production

- Mean time to recover

- Defect rate in production

AI-specific metrics

- Time saved per developer per sprint

- Reduction in review time

- Model performance and drift metrics

- Cost per inference or per model deployment

Tracking these will help justify continued investment and guide where AI-driven automation should expand.
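Two of the operational metrics are easy to compute once you have timestamps. Here is a small sketch, assuming you can export commit and deploy times, and incident detection and resolution times, from your own tooling. The sample data and function names are made up.

```python
from __future__ import annotations

from datetime import datetime
from statistics import median

Interval = tuple[datetime, datetime]  # (start, end)


def _hours(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 3600


def median_lead_time_hours(changes: list[Interval]) -> float:
    """Median time from commit to production deploy, in hours."""
    return median(_hours(committed, deployed) for committed, deployed in changes)


def mean_time_to_recover_hours(incidents: list[Interval]) -> float:
    """Average time from incident detection to resolution, in hours."""
    durations = [_hours(detected, resolved) for detected, resolved in incidents]
    return sum(durations) / len(durations)


# Hypothetical (commit, deploy) pairs for three changes.
changes = [
    (datetime(2025, 3, 3, 9, 0), datetime(2025, 3, 3, 15, 0)),   # 6 hours
    (datetime(2025, 3, 4, 10, 0), datetime(2025, 3, 5, 10, 0)),  # 24 hours
    (datetime(2025, 3, 5, 8, 0), datetime(2025, 3, 5, 20, 0)),   # 12 hours
]
# Hypothetical (detected, resolved) pairs for two incidents.
incidents = [
    (datetime(2025, 3, 6, 14, 0), datetime(2025, 3, 6, 14, 30)),  # 0.5 hours
    (datetime(2025, 3, 9, 2, 0), datetime(2025, 3, 9, 3, 30)),    # 1.5 hours
]

lead_time = median_lead_time_hours(changes)     # 12.0
mttr = mean_time_to_recover_hours(incidents)    # 1.0
```

Capture these numbers before the pilot starts so you have a baseline to compare against.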

Common Mistakes and How to Avoid Them

Adopting AI is not plug and play. You will hit some predictable bumps. Here are things I see often and how to avoid them.

- Relying on AI blindly. Mistake: accepting suggestions without review. Fix: require human verification, include unit tests, and run static analysis.

- Ignoring costs. Mistake: spinning up many model calls without tracking the spend. Fix: add cost monitoring and optimize prompts, batching, or caching.

- No governance. Mistake: different teams use different models and manage secrets ad hoc. Fix: centralize policies, maintain an internal model registry, and control access.

- Underestimating data needs. Mistake: expecting great models without good domain data. Fix: invest in labeled data, data quality, and feature engineering.

- Overengineering early. Mistake: building a complex platform before proving value. Fix: iterate with pilot projects, measure results, then scale.

Tools and Tech Stack Recommendations

You do not need to use every new tool. Pick tools that match your needs and workflow. Below are categories and examples you can consider.

- AI coding assistants. Use for local productivity and pair programming. Examples include code-completion LLMs and IDE plugins.

- Model orchestration and MLOps. Tools that automate training, deployment, and monitoring for machine learning models.

- Security and code quality. Static analysis and dependency scanning integrated with your CI.

- Feature stores and data registries. For reproducibility in machine learning development.

- Prompt management platforms. Store and version prompts and templates so teams share best practices.

Pick vendors and open source software that let you operate securely, especially if you are building enterprise AI solutions.

Sample Prompts You Can Copy and Paste

Here are ready-made prompts that have worked for engineering teams. Tweak them to match your stack and coding style.

Prompt: "Refactor and improve this Go function for clarity and performance. Keep the public API identical. Highlight changed lines and explain why these changes help. Include unit tests."

Use this when you want safer refactors that preserve behavior.

Prompt: "Generate end-to-end tests for the checkout flow described below. Include happy path and five negative tests. Use Playwright or Selenium style pseudo code."

Good for QA teams who want quick, actionable tests to paste into CI.

Prompt: "Given this SQL query and table schema, suggest indexes and query rewrites to lower average response time. Provide estimated complexity and any side effects."

Helps reduce DB load without guessing.
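Whatever index the AI suggests, verify it against the query planner before shipping. Here is a minimal sketch using Python's built-in `sqlite3` module with a hypothetical `orders` table; the same idea applies to `EXPLAIN` in other databases, though the output format differs.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1_000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"


def query_plan(connection: sqlite3.Connection, sql: str, params: tuple) -> str:
    """Return SQLite's plan description for a query as one string."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column is the detail text


# Before the index, SQLite has to scan the whole table.
plan_before = query_plan(conn, QUERY, (42,))

# Apply the suggested index, then confirm the planner actually uses it.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, QUERY, (42,))

print("before:", plan_before)
print("after: ", plan_after)
```

The exact wording of the plan varies between SQLite versions, but the first should describe a scan and the second should mention `idx_orders_customer`. Remember the side effect: each extra index slows down writes a little.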

Architecture Patterns for AI-Enhanced Systems

When AI becomes part of your product, architecture decisions matter. These patterns come up repeatedly.

1. AI as a Service Layer

Abstract AI capabilities behind service APIs. Your product calls a single internal AI gateway that handles routing, caching, and policy checks. This centralizes governance and lets you switch underlying models without changing product code.
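A rough sketch of such a gateway is below. Everything in it is hypothetical: the `AIGateway` class, the task and model names, and the simple blocked-terms policy. The stub lambdas stand in for real model clients.

```python
from __future__ import annotations

from typing import Callable

ModelFn = Callable[[str], str]


class PolicyError(Exception):
    """Raised when a prompt violates a gateway policy."""


class AIGateway:
    def __init__(self, models: dict[str, ModelFn], routes: dict[str, str],
                 blocked_terms: tuple[str, ...] = ()):
        self._models = models                # model name -> callable backend
        self._routes = dict(routes)          # task name -> model name
        self._blocked_terms = tuple(t.lower() for t in blocked_terms)
        self._cache: dict[tuple[str, str], str] = {}
        self.backend_calls = 0               # useful for cost tracking

    def set_route(self, task: str, model_name: str) -> None:
        """Switch the model behind a task without touching product code."""
        self._routes[task] = model_name

    def complete(self, task: str, prompt: str) -> str:
        lowered = prompt.lower()
        for term in self._blocked_terms:     # policy check
            if term in lowered:
                raise PolicyError(f"prompt contains blocked term: {term!r}")
        model_name = self._routes[task]      # routing
        key = (model_name, prompt)
        if key not in self._cache:           # caching
            self.backend_calls += 1
            self._cache[key] = self._models[model_name](prompt)
        return self._cache[key]


gateway = AIGateway(
    models={"small": lambda p: f"[small] {p}", "large": lambda p: f"[large] {p}"},
    routes={"pr-summary": "small"},
    blocked_terms=("password",),
)
first = gateway.complete("pr-summary", "Summarize the retry logic change")
second = gateway.complete("pr-summary", "Summarize the retry logic change")  # cache hit
```

Product code only ever calls `complete` with a task name, so swapping the underlying model is a one-line `set_route` change inside the gateway.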

2. Hybrid Inference

Run light models on edge devices for low latency, and heavier models in the cloud for complex tasks. Route requests based on latency and cost. It is practical for features like smart autocomplete or on-device personalization.
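One possible routing policy looks like this. The task names, the 512-token limit for the edge model, and the 150 ms cloud round trip are assumptions for illustration; real thresholds would come from your own measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    task: str
    latency_budget_ms: int
    input_tokens: int


# Hypothetical capabilities and timings.
EDGE_TASKS = {"autocomplete", "personalization"}
EDGE_MAX_TOKENS = 512        # the light on-device model only handles small inputs
CLOUD_ROUND_TRIP_MS = 150    # typical network plus inference time for the cloud model


def choose_target(req: Request) -> str:
    """Pick "edge" or "cloud" for a request, or raise if neither can serve it."""
    edge_capable = req.task in EDGE_TASKS and req.input_tokens <= EDGE_MAX_TOKENS
    if edge_capable:
        # The edge wins on both latency and cost whenever it can do the job.
        return "edge"
    if req.latency_budget_ms >= CLOUD_ROUND_TRIP_MS:
        return "cloud"
    raise ValueError(
        f"no target can serve task {req.task!r} within {req.latency_budget_ms} ms"
    )


short_completion = choose_target(Request("autocomplete", 50, 120))    # "edge"
long_completion = choose_target(Request("autocomplete", 1000, 2000))  # "cloud"
code_review = choose_target(Request("code-review", 2000, 4000))       # "cloud"
```

Raising an error for requests nobody can serve in time forces the calling feature to decide on a fallback instead of silently missing its latency target.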

3. Feature Store and Model Registry

Store feature definitions and model artifacts in a single place. Make reproducibility a first class concern. This pattern reduces surprises when retraining models or debugging production behavior.

Realistic Cost Considerations

Everyone asks about costs. There are three areas to budget for.

- Compute. More inference and training means more cloud spend. Optimize by batching requests, caching, and lowering model precision where possible.

- People. You need engineers and ML specialists to integrate AI safely and effectively. Plan for training and time to maintain the system.

- Tooling and governance. Logging, monitoring, and security tooling add cost but reduce risk.

Measure your cost per useful outcome. I find the easiest wins are replacing repetitive manual work and automating tests. That gives quick ROI and helps fund broader AI initiatives.
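"Cost per useful outcome" is simple arithmetic once you agree on what counts as an outcome. In this sketch the outcome is an AI-generated test that a developer reviewed and merged; the dollar figures and the manual baseline are invented for illustration.

```python
def cost_per_useful_outcome(compute_cost: float, people_cost: float,
                            tooling_cost: float, useful_outcomes: int) -> float:
    """Total monthly spend across the three budget areas, per accepted outcome."""
    if useful_outcomes <= 0:
        raise ValueError("need at least one useful outcome to compute a unit cost")
    return (compute_cost + people_cost + tooling_cost) / useful_outcomes


# Hypothetical month: 400 AI-generated tests were reviewed and merged.
ai_cost_per_test = cost_per_useful_outcome(
    compute_cost=1_200.0,
    people_cost=6_000.0,
    tooling_cost=800.0,
    useful_outcomes=400,
)  # 8,000 / 400 = 20.0

# Hypothetical baseline: a hand-written test costs about 35 in developer time.
MANUAL_COST_PER_TEST = 35.0
monthly_saving = (MANUAL_COST_PER_TEST - ai_cost_per_test) * 400  # 6,000.0
```

Counting only accepted outcomes keeps the metric honest, because rejected suggestions still cost compute and review time.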

Quick Case Example: Adding AI to a Developer Portal

Imagine a developer portal for internal APIs. You want to introduce AI-assisted documentation and code snippets to improve onboarding.

Step by step:

- Start with a pilot on one set of APIs. Use an AI model to generate usage examples based on the OpenAPI spec.

- Share the generated snippets with a small group of developers and collect feedback.

- Integrate approvals so maintainers can edit examples before publication.

- Measure time to first successful call for new developers. If it improves by 20 to 30 percent, expand to more APIs.

Common mistake: deploying generated docs without a human check. That leads to inaccurate examples and lost trust. Always validate before publishing.
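The expansion decision in the last step can be checked with a few lines of Python. The onboarding times below are invented sample data, and using the median rather than the mean is my assumption, chosen so one slow outlier does not skew the result.

```python
from __future__ import annotations

from statistics import median


def improvement_percent(baseline_minutes: list[float],
                        pilot_minutes: list[float]) -> float:
    """Percent reduction in median time to first successful API call."""
    before = median(baseline_minutes)
    after = median(pilot_minutes)
    return (before - after) / before * 100


# Hypothetical minutes to first successful call, per new developer.
baseline = [50, 62, 58, 75, 60]   # before AI-generated examples (median 60)
pilot = [40, 45, 52, 38, 47]      # with AI-generated examples (median 45)

improvement = improvement_percent(baseline, pilot)  # 25.0
expand_to_more_apis = improvement >= 20             # True
```

With only a handful of developers in a pilot, treat the number as a signal rather than proof, and keep collecting data as you expand.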

Human Factors and Change Management

Technology is only part of the story. Change management is where projects succeed or fail. In my experience, teams that communicate wins, run training sessions, and provide clear usage guidelines adopt AI faster.

Tips that work:

- Run short lunch and learn sessions showing real examples.

- Create a small champions group that helps others adopt AI tools.

- Collect feedback and iterate on prompts and templates.

Checklist to Start Your AI-Assisted Development Journey

Here is a practical checklist to get started quickly.

- Choose a small pilot project that has clear success metrics.

- Pick a single AI coding tool and integrate it in the IDE or CI.

- Define governance rules for data and model usage.

- Create templates and a prompt library for repeatable tasks.

- Measure developer time saved and impact on cycle time.

- Document a rollout plan based on results and lessons learned.

Final Thoughts and Recommendations

AI-assisted software development is about amplifying human capability, not replacing it. The best results come from teams that treat AI as a collaborator with guardrails. Start small, measure everything, and scale what works.

If you are thinking of enterprise AI solutions, focus on governance, reproducibility, and cost control early. Those might not be glamorous, but they prevent costly mistakes later.

I've worked with leaders who expected overnight transformation. It rarely happens. What does work: incremental improvements, strong engineering practices, and a clear connection to business outcomes.

Helpful Links & Next Steps

If you want help building AI-driven software or piloting AI-assisted development, reach out. At Agami Technologies Pvt Ltd we help businesses design scalable AI software solutions and bring practical machine learning development into production. Book a free consultation and we can sketch a roadmap together.